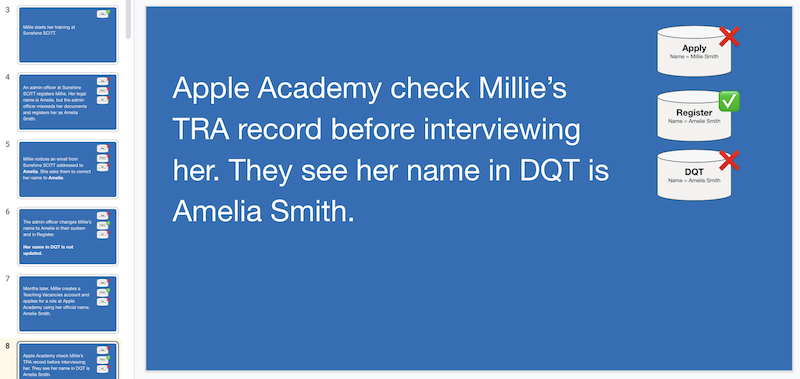

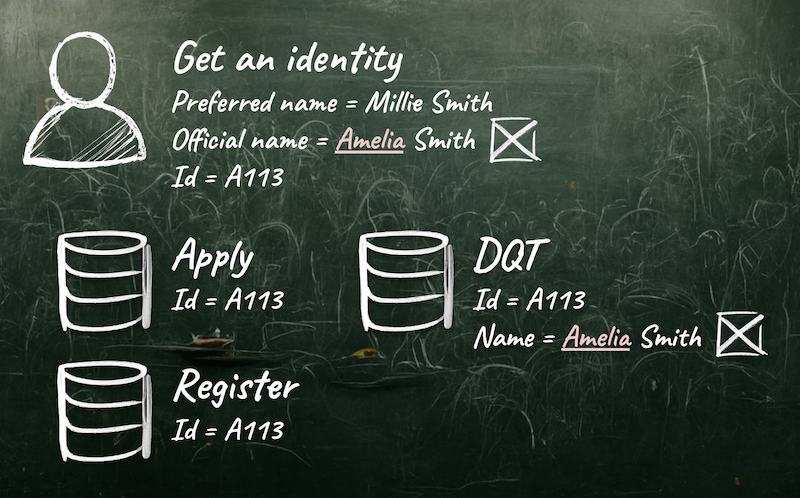

On a project for the Department for Education (DfE) we needed a captivating way of showing the benefits of a shared identity service. We needed a narrative.

We chose to tell the story of a young teacher who moves through DfE’s collection of digital services as her career progresses.

She applies for teacher training, completes her training, applies for jobs, completes her induction training, takes on extra professional qualification courses, and so on.

At the same time, two things happen:

- her name is entered incorrectly into one system

- she later gets married and changes her name

The story shows what happens when names get mixed up, and how a joined-up identity service could avoid these problems.

When things got silly for Millie

We called the teacher Millie, and our content designer Emma added the rhyme. I was asked to add some visuals to make the story compelling.

‘Silly for Millie’ screamed ‘children’s story book’ to me.

I’d been experimenting with AI image tools for a few weeks, and I felt confident I could create a consistent storybook style using them.

The illustrated story

Midjourney and DALL·E

In July, after joining the waitlist in April, I was given access to DALL·E, an AI system by OpenAI that can create realistic images and art from a text description.

Shortly after, I discovered Midjourney, an image generator made by a small independent research lab of the same name.

Both take prompts and make images from them.

Midjourney

Midjourney is expressive, detailed and beautiful when it comes to making art. But it’s awkward to get what you want if you’re trying to escape its house aesthetic.

You can set an aspect ratio, reuse a seed and use another image as a reference.

Prompt: A path going along a hill, left to right

DALL·E

DALL·E is vaguer with art, but can produce astounding realism. It doesn’t really have a house aesthetic, unless you count the generation artefacts that appear in complex images, most notably in faces.

It’s easier to produce almost anything with it, but images must be square, which sometimes doesn’t suit the prompt, and awkwardly cropped results are frequent.
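To get a sense of what the square format costs on a 16:9 slide, here’s a small Python sketch that finds the largest centred crop of a given aspect ratio. The 1024×1024 input size is an assumption about DALL·E’s output, and `centre_crop` is a hypothetical helper written for illustration, not part of any tool used on this project.

```python
from fractions import Fraction


def centre_crop(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Largest centred crop with aspect ratio ratio_w:ratio_h, as (left, top, right, bottom).

    Hypothetical helper for illustration only.
    """
    target = Fraction(ratio_w, ratio_h)
    if Fraction(width, height) > target:
        # Image is wider than the target: keep the full height, trim the sides.
        crop_w, crop_h = int(height * target), height
    else:
        # Image is taller than (or equal to) the target: keep the full width, trim top and bottom.
        crop_w, crop_h = width, int(width / target)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


# Assumed 1024x1024 square image, cropped to fill a 16:9 slide.
box = centre_crop(1024, 1024, 16, 9)
kept = (box[2] - box[0]) * (box[3] - box[1]) / (1024 * 1024)
print(box, f"{kept:.2%} of the pixels kept")
```

On a 1024×1024 image the crop is 1024×576, so only 56.25% of the pixels survive, and anything at the top or bottom of the composition is lost.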

DALL·E has a powerful in-painting feature. You can erase parts of an image and refill them with a prompt. This has led users to make ‘uncrops’ of famous artworks like the Mona Lisa.

Prompt: a resplendent quetzal bird in the rainforest with tropical leaves, in an art deco style, with greens, reds and warm pastels

Bringing Millie to life using AI

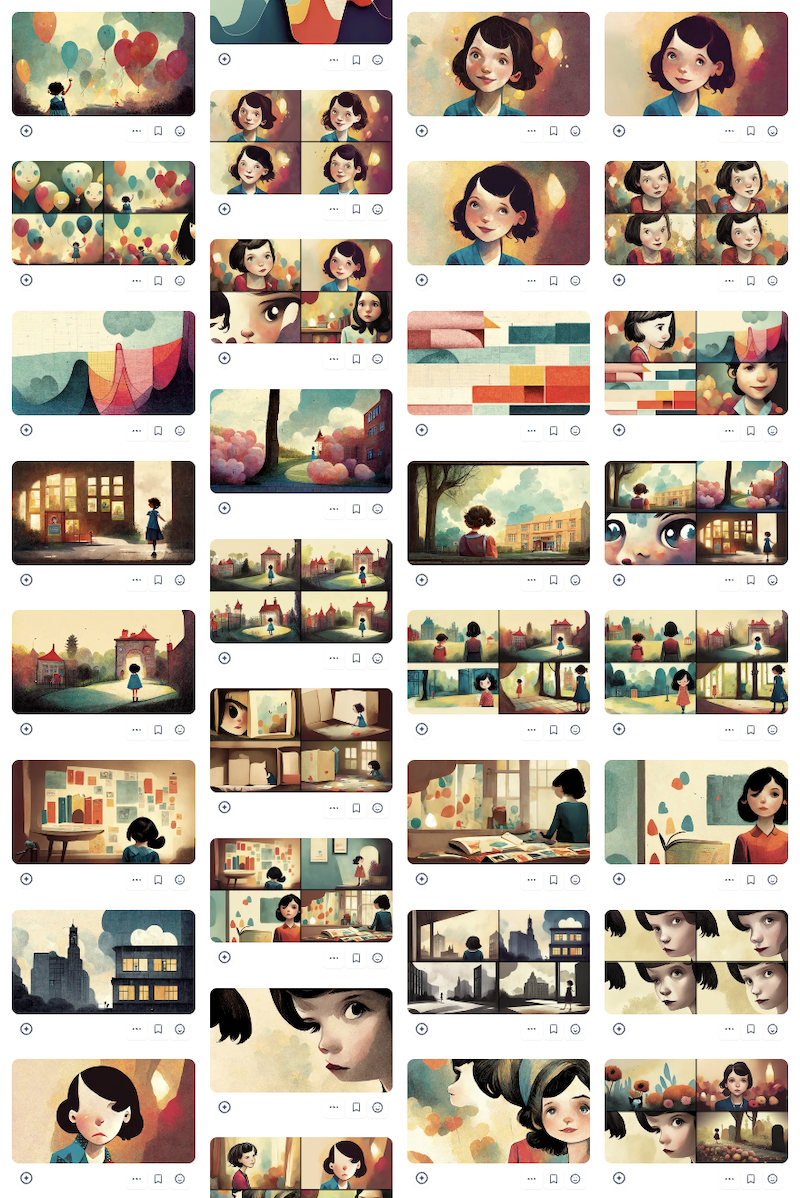

I used Midjourney for the artwork.

Its generations were invariably artistic and consistent. DALL·E was more hit and miss – sometimes I’d get a storybook image, other times it would be more like a child’s drawing or a doodle in MS Paint (an example of a miss).

Midjourney gave me the right tools to make the job easier.

Aspect ratio

I could specify a 16:9 aspect ratio for images with --ar 16:9, meaning they’d perfectly fill a slide in Google Slides.

Maintaining consistency

From the beginning, Midjourney seemed to have a consistent, somewhat French-looking, representation of what Millie should look like.

Each prompt would produce a picture of her with dark brown hair and a slight parting (left or right, but the image could be flipped). Sometimes Millie would be an adult and sometimes a child, so I’d need to add “teacher” to prompts to make her older.

I was able to enforce a consistent style by:

- always reusing the phrase “when things got silly for Millie” in each prompt

- using the same reference image as a basis for each prompt, with an increased image weighting (--iw 1)

- reusing the same random seed, so each generation started from the same point (--seed 1234)

An example prompt:

/imagine prompt: [image URL] --iw 1 when things got silly for millie, [a college building with a spring sunrise, bright and early, very light, warm glow, light blues and greens] --ar 16:9 --seed 1234

The description would change, for example:

celebration with balloons, everyone is happy

millie celebrates

the front of a school

standing at the school gates, a big old school and playground
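To make the consistency technique concrete, here’s a minimal Python sketch that assembles prompts in the same layout as the example prompt: the reference image and its weight, the fixed phrase, a changing description, then a fixed aspect ratio and seed. The prompts were typed into Midjourney by hand, not generated by code, so `build_prompt` and the placeholder image URL are hypothetical. I’ve also assumed the square brackets in the example mark the parts that change rather than literal text.

```python
# Hypothetical helper: holds every part of a Midjourney /imagine prompt fixed
# except the scene description, mirroring the example prompt's layout.
REFERENCE_IMAGE = "https://example.com/millie-reference.png"  # placeholder URL, not the real image
STYLE_PHRASE = "when things got silly for millie"


def build_prompt(description: str, image_url: str = REFERENCE_IMAGE,
                 image_weight: float = 1, aspect: str = "16:9",
                 seed: int = 1234) -> str:
    """Return one prompt string; only `description` is meant to vary between scenes."""
    return (
        f"/imagine prompt: {image_url} --iw {image_weight:g} "
        f"{STYLE_PHRASE}, {description} --ar {aspect} --seed {seed}"
    )


descriptions = [
    "celebration with balloons, everyone is happy",
    "millie celebrates",
    "the front of a school",
    "standing at the school gates, a big old school and playground",
]

for d in descriptions:
    print(build_prompt(d))
```

Keeping the reference image, phrase, aspect ratio and seed as defaults makes it hard to change them by accident, which is the point of the technique: only the scene changes from slide to slide.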

The cost of making images

I could experiment without limits within a standard Midjourney subscription, which is $30 per month.

Results were consistent, though some had odd artefacts, such as missing or unconnected limbs or upscaling glitches. As generations cost nothing beyond the subscription, I could try again and again until an image was right.

With DALL·E, every prompt costs about 12 pence ($15 for 115 prompts), and I couldn’t maintain consistency, so I would have needed more prompts, each at a higher cost.
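As a rough check on the DALL·E figure, here is the per-prompt price in dollars, plus a hypothetical total that assumes, purely for comparison, that all of the roughly 240 prompts this story took had gone through DALL·E instead of Midjourney:

$$
\frac{\$15}{115\ \text{prompts}} \approx \$0.13\ \text{per prompt}, \qquad 240 \times \frac{\$15}{115} \approx \$31.30
$$

That hypothetical $31.30 is already slightly more than a month of the $30 Midjourney subscription, before counting the extra prompts DALL·E’s weaker consistency would have needed. The pence figure depends on the dollar to pound exchange rate, so it isn’t derived here.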

There was a lot of iteration, and hundreds of prompts.

An example of prompt iteration

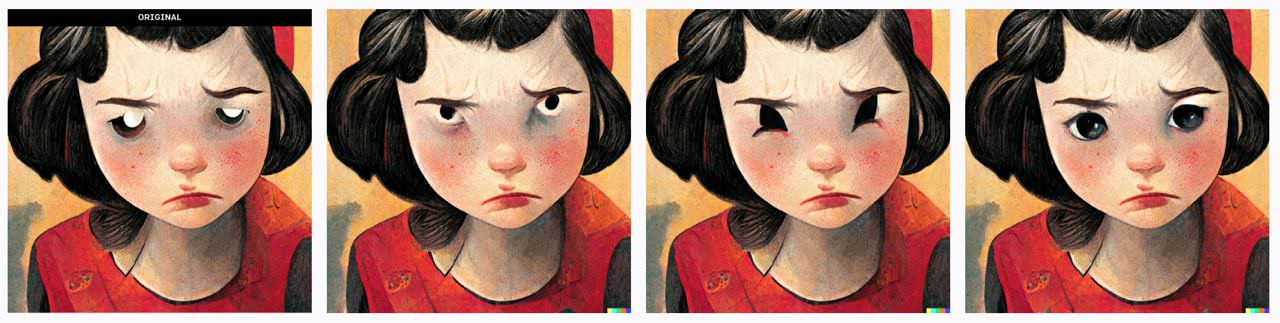

When I was trying to express that Millie was too busy and tired to prove her name change, I tried:

millie is very very busy

millie, a teacher, is tired, she sits with her head on her desk

(for this I got a disembodied head on a desk: not macabre, just literal)

millie, a teacher, is tired

millie is tired

millie has many arms and is trying to do too much, hands waving everywhere

I got a bit exasperated and tried ‘millie goes to a funeral’ and ‘millie gets a divorce’, but these looked more like grief than tiredness.

In the end, what worked for ‘busy and tired’ came from the prompt:

millie is angry

Then, when I needed more anger, I used:

millie gets very angry and red faced, she is frustrated as everything is going wrong

Icons and post-generation edits

DALL·E was invaluable for two specific parts of the process:

- Fixing Midjourney generation issues

- Creating icons for the blackboard slides

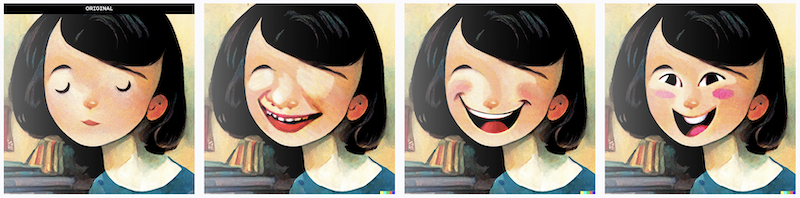

Fixing Midjourney with DALL·E

Where Midjourney had produced an almost perfect image with one obviously wrong part, I could crop that area and fix it with DALL·E in-painting.

This was usually a problem with eyes, or oddly toothy grins.

In-painting tips

Typically you need to erase more of an image than you’d expect – it’s a fine balance between:

- keeping enough of the image to maintain the look and feel of the original, so DALL·E has enough to match against

- removing enough so that DALL·E has adequate space to fill the gaps with something realistic. For example, if you wanted to add an object, that object will have an effect on things around it, like reflections or shadows, and you need to give DALL·E space to account for that.
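One way to reason about that balance is to start from a box around the defect and grow it by a margin, clamped to the image edges, then check how much of the image is left as context. The sketch below is hypothetical: `expand_box`, the 50% margin and the 1024×1024 crop size are assumptions for illustration, not DALL·E settings or the values used here, where erasing was done by hand in DALL·E’s editor.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned rectangle in pixel coordinates (right and bottom exclusive)."""
    left: int
    top: int
    right: int
    bottom: int


def expand_box(box: Box, margin_ratio: float, width: int, height: int) -> Box:
    """Grow `box` on every side by margin_ratio of its own size, clamped to the image.

    Hypothetical helper: the margin leaves room for shadows and reflections
    around whatever is being repainted.
    """
    dx = round((box.right - box.left) * margin_ratio)
    dy = round((box.bottom - box.top) * margin_ratio)
    return Box(
        left=max(0, box.left - dx),
        top=max(0, box.top - dy),
        right=min(width, box.right + dx),
        bottom=min(height, box.bottom + dy),
    )


def kept_fraction(erase: Box, width: int, height: int) -> float:
    """Share of the image left untouched, for the model to match against."""
    erased = (erase.right - erase.left) * (erase.bottom - erase.top)
    return 1 - erased / (width * height)


# Hypothetical example: an odd-looking eye inside a 1024x1024 crop.
eye = Box(400, 300, 480, 360)
erase = expand_box(eye, margin_ratio=0.5, width=1024, height=1024)
print(erase, f"{kept_fraction(erase, 1024, 1024):.1%} kept")
```

Doubling the erased box’s width and height around the defect still leaves about 98% of this crop untouched, which shows how much room there usually is to erase generously.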

In-painting could also miss the mark wildly:

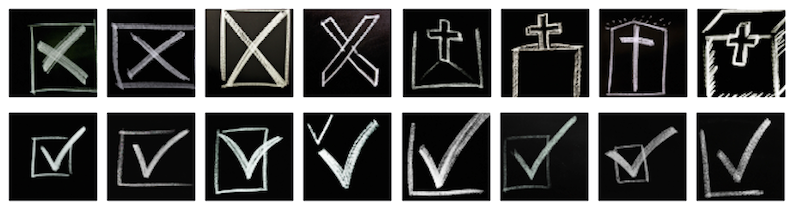

Using DALL·E for icons

DALL·E is excellent at making icons. It got these right first time.

In the slides I use a blackboard analogy to show the various data states.

I used prompts like:

a chalk drawing of a database on black

a chalk drawing of a user profile picture, on black

a chalk drawing of a white checkmark, on black

(note the ‘cross’, which became an ‘X’)

These were finessed in Photoshop to increase their brightness and contrast against the blackboard:

How long it took

The graphics for this story – 27 separate images selected and edited from roughly 240 prompts – took about a day to produce.

Without AI I would never have created anything like this. I may have tried to use stock images to convey emotion, or icons for databases, but the results wouldn’t have had nearly the same impact.

Just two months ago it wouldn’t have been made like this.

This story is doing its job better than we imagined.